-

Smart AI Cloud Platform

An enterprise-grade platform for developing and running AI, designed with AI as its core focus and built on cloud-native technology. It integrates powerful computing resources, simplified management, a user-friendly development environment, tools for managing models across their full lifecycle, and robust security. The platform helps enterprises address the efficiency, cost, engineering, and reliability challenges of adopting AI, providing a solid foundation for large-scale AI projects.

-

DataCanvas Alaya NeW

A full-featured, cloud-based platform built specifically for AI and large language model (LLM) projects. It covers everything needed to develop AI applications and run them in production.

AI Data Center OS

The foundational system for managing large-scale AI computing resources. It functions as a "super scheduler" that optimizes the utilization of AI computing power.

-

-

Developer Center

Developer Center is a one-stop platform designed specifically for AI developers, with the core vision of “Making industrial-grade AI as easy to build as stacking blocks.” The platform deeply integrates advanced AI infrastructure with the open-source technology ecosystem, supporting developers through the full process: environment setup, model development, deployment, and community collaboration.

-

Open-Source Suite

The open-source suite includes DingoDB (multi-modal vector database), YLearn (causal learning software), and DAT (automated machine learning software).

Key Open-Source Products

These products focus on improving AI development efficiency and engineering practice, building out the DataCanvas open-source ecosystem.

-

-

Technology Exploration

DataCanvas breaks with traditional technology paradigms by exploring integrated cloud-native AI architectures.

-

National and Industry Standards

DataCanvas drives industry transformation through cutting-edge technology breakthroughs and has full-stack capabilities to support enterprise-level large model applications. The company actively participates in drafting multiple national and industry standards.

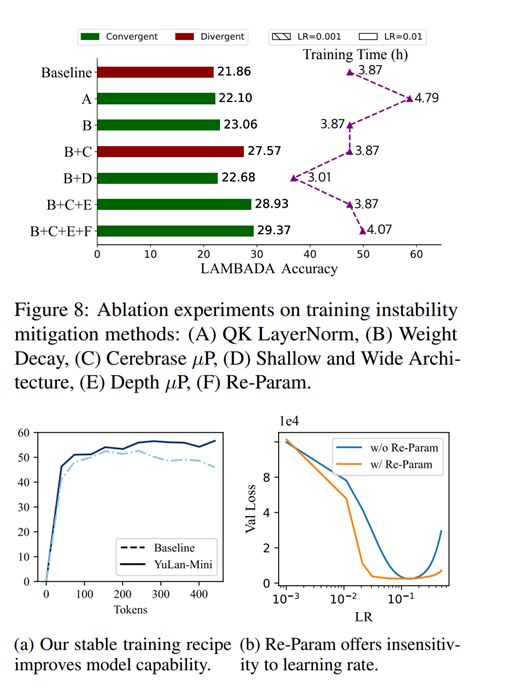

Publications and Patents

Over the years, DataCanvas has consistently published at top international academic conferences and journals, with more than 10 papers at prominent AI conferences such as ICLR, and holds over 400 independent intellectual property rights.

-

-

Ecosystem Collaboration

DataCanvas builds an open and collaborative ecosystem, partnering with cloud vendors, domestic chip manufacturers, industry ISVs, and leading research institutions to deliver full-stack, innovative AI solutions. Through technology synergy, mutually beneficial business partnerships, and joint standard-setting, the company has delivered numerous benchmark cases, accelerating large-scale AI adoption across industries.

-

News

DataCanvas News covers technology breakthroughs, business partnerships, industry honors, and ecosystem developments, with timely updates on key progress.

-

About Us

Positioning: Leading provider of AI infrastructure software, focused on cloud-native AI platforms and large model toolchains.

Technology: Proprietary core technologies, including the Alaya AI Data Center Operating System and Hypernets automated machine learning framework.

Achievements: Ranked among the top 4 in IDC's China AI platform market; leads the development of national standards and serves multiple benchmark clients.

Mission: Promote the industrialization of AI in enterprises and build an open, win-win ecosystem.

-

Company Overview

DataCanvas is a leading provider of AI infrastructure and intelligent computing cloud services. Its notable brands, including Alaya NeW Cloud and Alaya NeW OS, are widely recognized in the industry. The company delivers high-performance computing, intelligent cloud services, and AI software for both AI training and inference, empowering a broad range of AI developers and enterprise clients.

Key Technological Milestones

Founded in 2013, DataCanvas has consistently achieved technological breakthroughs and earned widespread recognition.

Honors

Government Accreditation & Industry Recognition & Institutional Certification

Authoritative Certifications

Full-stack innovation and the highest level of financial-grade security compliance

Contact Us

leo@zetyun.com

-